GazeGPT and the Future of Smart Glasses

How context-based eyewear could change human interaction

Large multimodal models (LMMs) can process and generate text, images, and sometimes audio and video – but how does this work in the context of smart glasses? According to a team of researchers from Zinn Labs, one possible application is “GazeGPT.”

GazeGPT is a proof-of-concept AI wearable that provides context-aware answers to a person’s queries by using their gaze as input. Its core premise is that the most important thing an AI assistant can know is what the asker is looking at.

We spoke to Nitish Padmanaban, Co-Founder of Zinn Labs, to learn more about the nature of their research, the challenges in the field, and what the future could hold for intelligent eyewear.

What is GazeGPT?

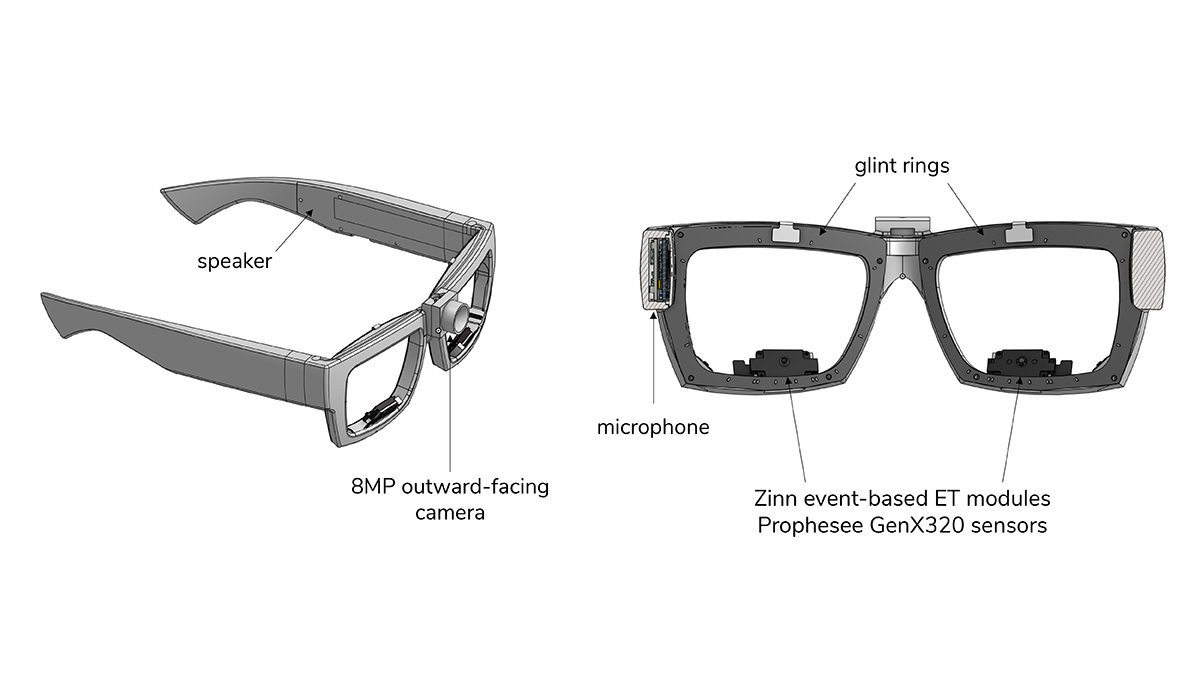

We wanted to create something that could provide an upstream service, such as ChatGPT, with contextual information. To achieve this, we equipped a wearable device with a world-facing camera and eye-tracking sensors to provide images of the user’s object of interest alongside their query. These images allow the user to ask straightforward, natural questions about their surroundings and receive well-informed answers in return.

For example, if you were looking at a restaurant menu in a foreign language, you could ask “what are the ingredients in this dish?” and GazeGPT could use context to describe the exact menu item you looked at, without you having to pronounce or spell an unfamiliar name.
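As a rough illustration of the idea described above, pairing a question with the part of the world-camera image the wearer is looking at, here is a minimal Python sketch. It is not Zinn Labs’ implementation: the `GazeQuery` payload, the function names, the 256-pixel crop size, and the frame and gaze coordinates are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class GazeQuery:
    """Hypothetical payload: the question plus the image region being looked at."""
    question: str
    crop_box: tuple  # (left, top, right, bottom) in world-camera pixels


def crop_box_around_gaze(gaze_x, gaze_y, frame_w, frame_h, crop_size=256):
    """Return a square box centred on the gaze point, shifted to stay inside the frame."""
    size = min(crop_size, frame_w, frame_h)
    left = min(max(int(gaze_x) - size // 2, 0), frame_w - size)
    top = min(max(int(gaze_y) - size // 2, 0), frame_h - size)
    return (left, top, left + size, top + size)


def build_query(question, gaze_xy, frame_size):
    """Bundle the question with the crop box around the gaze point (assumed design)."""
    box = crop_box_around_gaze(gaze_xy[0], gaze_xy[1], frame_size[0], frame_size[1])
    return GazeQuery(question=question, crop_box=box)


# Assumed values: a 1280x720 world-camera frame and a gaze point near the top right.
query = build_query("What are the ingredients in this dish?", (900, 120), (1280, 720))
print(query.crop_box)
```

In a setup like this, the cropped region and the question would be sent together to the upstream model, so the wearer never has to name the object they are asking about.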

Why are you personally interested in this field?

As a team, we’ve always been focused on using technology to enhance the human experience. Previously, we used gaze-tracking technology to make automatically refocusing eyeglasses, but this work is farther out than we’d like. Smart eyewear, in my opinion, fits into the same broad category of tools but focuses on knowledge as opposed to health.

What are the limitations of current smart accessories?

Though we think this space holds a lot of future potential, there are several areas for improvement in both hardware and software. For the former, weight and battery life are major concerns, and these in some ways work directly against each other. For the latter, latency and response quality are still lacking, but LMMs are likely to improve rapidly on one or both fronts.

What are the challenges in designing interfaces between LMMs and humans?

In many ways, LMMs simplify the task of creating an interface between technology and people. The ability to use natural language and images means that there is less friction for a user than ever before – you don’t even need to learn how to use a keyboard. The real challenge lies in identifying which aspects of input and output that we currently take for granted may no longer fit into a world with LMMs.

What were the main findings of your study?

Our study focused on showing that gaze can enhance the context for questions asked of an LMM. We determined that, compared to other ways of pairing an image with a query (for example, using an image from a bodycam or assuming the wearer’s head is pointed directly at the object of interest), using gaze to select an object in the world camera view was categorically better. In fact, people were able to make selections faster and more accurately, and the interface remained intuitive.
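To make the comparison concrete, the sketch below contrasts two ways of choosing a selection point in the world-camera frame: the tracked gaze point, and the frame centre (the “head pointed directly at the object” assumption). It does not reproduce the study’s method or data. The function names, the frame size, the object box, and the gaze coordinates are hypothetical values chosen for illustration.

```python
def contains(box, point):
    """True if the point lies inside a (left, top, right, bottom) box."""
    left, top, right, bottom = box
    x, y = point
    return left <= x < right and top <= y < bottom


def selection_point(strategy, frame_size, gaze_xy=None):
    """Pick the pixel used to select an object, under an assumed strategy name."""
    if strategy == "gaze":
        if gaze_xy is None:
            raise ValueError("the gaze strategy needs a gaze point")
        return gaze_xy
    if strategy == "head":
        # Assume the wearer's head points at the object: use the frame centre.
        return (frame_size[0] // 2, frame_size[1] // 2)
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical scene: the menu item sits in the upper right of a 1280x720 frame.
frame_size = (1280, 720)
menu_item_box = (850, 60, 1100, 160)
gaze = (900, 120)

gaze_hit = contains(menu_item_box, selection_point("gaze", frame_size, gaze))
head_hit = contains(menu_item_box, selection_point("head", frame_size))
print(gaze_hit, head_hit)  # True False
```

In this made-up scene the wearer glances at the item without turning their head, so only the gaze-based point lands on it.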

What does your next phase of research involve?

For the future, we plan on improving the functionality of both our hardware and eye-tracking systems. One of our current priorities is to miniaturize the components and reduce the computational requirements of the eye tracker. Both matter for designing a wearable that works all day in terms of battery life, comfort, and fashion. Battery life follows directly from more efficient computation. Less weight from smaller components and less heat from energy use both feed into comfort. And, for use in an actual product, the eye trackers have to be small enough to hide in the frames.

How do you envision the future of smart glasses in society?

We think that smart glasses are the ideal form factor for an intelligent wearable. They offer the best vantage point from which to see everything the user sees and, therefore, the best possible context for the AI. Eyeglasses are also common enough in society that the form factor is acceptable to use outside.

As LMMs and related AI technologies evolve, I believe smart glasses will provide easier access to the breadth of human knowledge, without the distraction that smartphone use inevitably falls prey to.
